Senior Reporter

NTSB Calls Uber’s Fatal Crash a Lesson for All Automated Vehicle Testing

A 2018 fatal pedestrian crash involving an Uber Technologies Inc. automated vehicle in Arizona indicates that the challenges of self-driving vehicle operations have not yet been solved, and underscores a lack of sufficient federal and state oversight of developmental automated vehicle testing, investigators with the National Transportation Safety Board said at a Nov. 19 hearing in Washington.

While the NTSB investigation into the accident in Tempe, Ariz., was highly critical of Uber’s automated testing safety culture, it concluded that the probable and contributing causes of the accident ranged from vehicle operator inattention to ineffective oversight of vehicle operators and the pedestrian’s night crossing outside a crosswalk while likely impaired by drugs.

The board issued 19 findings, six probable and contributing causes, and six new safety recommendations on the accident.

“But this crash was not only about an Uber test drive in Arizona,” said NTSB Chairman Robert Sumwalt. “This crash was about testing the development of automated driving systems on public roads. Its lessons should be studied by any company, testing in any state.”

Sumwalt added, “For anybody in the automated driving systems space, let me be blunt. Uber is now working on a safety management system. Are you? You can. You do not have to have the crash first.”

While trucks were not specifically mentioned at the hearing, investigators made clear that the challenges of safely testing autonomous vehicles are not limited to cars.

“The role of federal and state agencies is to ensure that the process of this testing is done safely at both levels,” said Bruce Landsberg, NTSB vice chairman. “I’m not sure that we’re seeing that. I think we have to start at the beginning, which means we need to lead at the front, not kind of sit back and say we’ll leave it all up to the manufacturers and the marketing departments.”

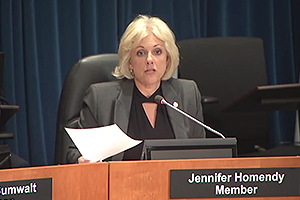

Homendy via NTSB.gov

NTSB board member Jennifer Homendy said that while some states and the National Highway Traffic Safety Administration may be issuing guidance, they are failing to establish standards for the testing of automated vehicles.

“We have a federal government that hasn’t issued safety standards,” Homendy said. “We have 29 states that have — but not really. Some of those states haven’t really issued robust safety standards or testing and deployment standards. That’s why we need some leadership on the federal and state level.”

NTSB engineer Rafael Marshall said post-crash toxicological testing showed the pedestrian killed in the crash had a high concentration of methamphetamine in her blood as well as an inactive metabolite of marijuana.

“The high level of methamphetamine suggested a high level of use which could affect perception and judgment,” Marshall said.

The crash occurred only about 45 minutes after the vehicle operator began her shift, but she was nonetheless inattentive, suffering from what Marshall called “automation complacency,” and watching a video on her smartphone.

“During the final three minutes, the vehicle operator looked down 23 different times,” Marshall said. “The operator’s final glance occurred six seconds before impact, and lasted for five seconds. She returned her gaze to the road about one second before impact.”

The vehicle operator was the system’s last layer of redundancy, since the car’s automatic braking system was turned off and Uber’s developmental automated driving system ultimately was unable to recognize that the obstruction in the road was a pedestrian, the investigation revealed.

But Sumwalt said that Uber has embraced the lessons from the crash, significantly improving its safety culture.

“We encourage them to continue on that journey, and for others in the industry to take note,” Sumwalt said. “Ultimately, it will be the public that accepts or rejects automated driving systems and the testing of such systems on public roads. Any company’s crash affects public confidence. Anybody’s crash is everybody’s crash.”
