Defining Driver Responsibility When Trucks Begin to Drive Themselves

Editor's note: An earlier version of this story incorrectly attributed comments by Richard Beyer, Bendix’s vice president of engineering and research and development, to another Bendix executive.

As truck makers and technology developers work toward introducing vehicles with some level of automated driving features in the years ahead, it will be essential for drivers to have a clear understanding of what those systems can and cannot do and how much they can rely on them.

One key concern is that drivers may place too much trust in limited self-driving systems designed with the expectation that the driver will remain attentive, or at least ready to resume control if needed.

In fact, that risk has persuaded many developers of this technology to push toward higher levels of automation that no longer rely on the driver as a fallback in an emergency.

The misuse of driver-assist features currently available on some passenger cars, as well as a crash involving a self-driving Uber SUV, have illustrated the challenges associated with relying on both a human driver and a computer program to pilot a vehicle.

In April, a British man received a temporary driving ban after he was caught riding in the front passenger seat of his Tesla while Autopilot was engaged and no one was sitting behind the wheel. Tesla's current Autopilot system is a so-called Level 2 driver-assist feature that can automatically brake, accelerate and keep the vehicle centered in its lane. However, it is not a fully autonomous driving system; it still requires drivers to watch the road and keep their hands on the wheel.

Inattentive drivers also present a risk for the testing of autonomous vehicles.

In March, a self-driving SUV operated by Uber Technologies Inc. struck and killed a woman in Arizona. A widely viewed video of the incident shows that the test driver appeared not to be watching the road when the woman suddenly appeared out of the darkness directly in the car’s path. The crash is under investigation by the National Transportation Safety Board.

Truck makers and technology suppliers that have been investing in the development of driver-assistance and autonomous driving systems as a way to improve road safety also have been wrestling with the question of how drivers should interact with the technology.

Much of the design effort involves taking into account the potential risks associated with human inattention or system failures.

“There has been debate about what the driver’s reaction is going to be,” said Keith Brandis, vice president for product planning at Volvo Trucks North America, who did not comment directly on the Uber video. “Does he need to interrupt and react quickly or will he somehow become too dependent and lulled into a false sense of security with this level of automation?”

In the immediate future, truck makers and suppliers said they seek to emphasize the safety of autonomous systems ahead of their deployment in test vehicles.

Therefore, autonomous systems must be able to control the truck better than human drivers can, even before they are deployed in test environments, said Jason Roycht, a vice president at component and system supplier Bosch North America.

“Without speaking directly about the Uber incident, I can say we don’t want to figure these things out after we already get the vehicles ready,” Roycht said. “The robust method to do this is to fix these problems via simulations and other tests.”

The Question of Driver Intervention

For many developers of self-driving technology, the way to solve the challenge of safely transferring control between the driver and the machine is to bypass it altogether by building systems that no longer require the driver to remain attentive or quickly resume control in an emergency.

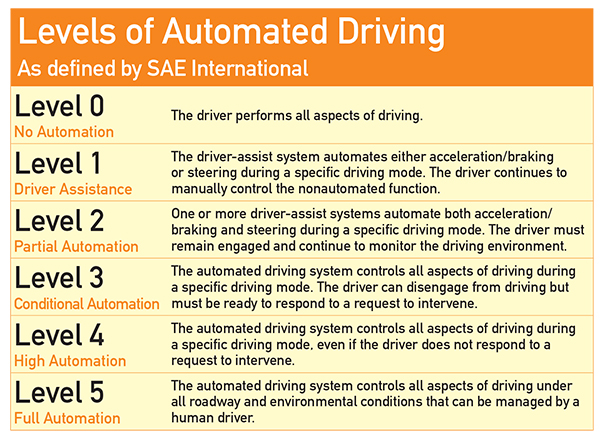

Technology firms Waymo and Uber, along with a number of startups, including Embark, TuSimple, Einride and Starsky Robotics, are developing prototypes of so-called Level 4 automated trucks that can pilot themselves, at least under certain conditions, with no driver input.

That would mean skipping past Level 3 automation, in which the vehicle is capable of driving itself without constant driver supervision but the driver must remain ready to respond to a request to intervene in a timely manner.

Developing autonomous trucks in which control may need to pass unexpectedly from the self-driving program back to the driver is futile and ultimately unsafe, said Kartik Tiwari, co-founder and chief technology officer at Starsky Robotics, a startup that is building self-driving trucks with remote drivers.

“It is critical to define exactly when and where the human is needed ahead of time,” Tiwari said. “Making the blanket statement that the human should intervene if the machine is not working properly is clearly the wrong assumption to make.”

In commercial transportation, fleets will adopt automated driving technology only if the business case makes sense.

“We need to provide a total cost-benefit to the industry, which is the main driver for why the industry will pursue specific levels of automation,” Bosch’s Roycht said.

“Making the case just based on driver comfort hasn’t been there to date,” he added. “The industry definitely sees the business benefit at Level 4 and 5, and so that is why you are seeing the focus there.”

Indeed, specifying when and where the truck will drive itself offers safety benefits and helps to solve the driver hand-off conundrum, said Richard Beyer, vice president of engineering and research and development at Bendix Commercial Vehicle Systems, a major supplier of braking and safety systems.

“You know the driver is out of the loop,” Beyer said. “At a certain point, the system puts the vehicle in a safe condition and says, ‘Now you are in charge.’ That is why you see Level 4 being touted — because Level 3 is not super practical.”

Automation and Productivity

Recent car launches, however, suggest that limited Level 3 automation might still have a place in trucking.

German premium carmaker Audi's new A8 offers Level 3 driving on highways at speeds below 37.3 mph (60 km/h).

In a commercial truck, such capabilities might allow the driver to be more productive in stop-and-go traffic in metropolitan areas, said industry analyst Richard Bishop, president of Bishop Consulting.

The driver could do tasks unrelated to driving, or rest, while the truck steers itself for long periods in heavy, slow-moving traffic. Besides being easier to engineer than high-speed Level 3 systems, low-speed systems carry lower safety risks.

“Traffic jams represent conditions that are not too risky for autonomous driving while the driver does other things,” Bishop said. “Carriers could offer to equip cabs with a workspace to do other things when stuck in traffic, for example.”

Downtime in the cab as the truck drives itself in low-speed traffic conditions for extended periods of time also potentially could enhance fleets’ productivity — if that time no longer counted against federal limits on driving hours.

“The bigger and more substantial business case would be if you can get off the clock in terms of hours of service while you’re in that traffic jam,” Bishop said. “That’s a long and winding road with regulators, but that would be nice.”

The case for allowing vehicles to pilot themselves while the driver remains ready to take control at any time, or Level 2 driving, is hard to make for commercial trucks, Bosch’s Roycht said.

Among other things, additional training to teach drivers to overcome the human tendency to place too much faith in the vehicle's self-driving system likely would not be worth the investment, he said. That training would come on top of the cost of the hardware and software that allow the truck to steer, brake and accelerate on its own while the driver still is responsible for monitoring the vehicle's progress and traffic conditions.

“The risk and cost associated with Level 2 driving just for a bit of extra driver comfort are a little bit tough to make,” Roycht said. “They are giving a false sense of security to the drivers … while only sort of helping the driver not pay as much attention while he still has a lot of responsibility.”

The Limitations of Self-Driving

Despite the often-hyped claims that driverless trucks are just a few years away, even the most advanced prototypes undergoing tests have limitations.

The functionality of truck sensors, for example, still is limited to certain weather conditions.

A truck that can drive itself in sunny conditions may not be able to do so in a tropical storm or in heavy snow.

This means that even the most advanced autonomous trucks may require driver input at some point, or simply won’t operate in those conditions.

“The Level 4 and Level 5 prototype vehicles are really Level 2, even if the sensors are efficient and intelligent,” Bendix’s Beyer said. “In bad weather conditions, the sensors can literally go blank.”

Starsky Robotics' Tiwari said that, at least in theory, human intervention should not be necessary when systems fail.

Currently, however, Starsky’s test vehicles give a signal for the driver inside the cab to take over, similar to a Level 3 vehicle. But as the company refines its technology, it expects its trucks eventually will either be able to pull off the road safely, or, in the worst case, gradually come to a stop if no other option is available, he said.

“We don’t want humans to ever have to make decisions during emergency situations,” Tiwari said. “Human response times are inferior, and our systems are designed to make the better call.”

Redundant controls and systems also will be needed to monitor the vehicle and to react to emergency situations as needed.

When the worst does happen and systems fail, trucks’ safety backup systems must be able to respond without input from the driver, said Dan Galves, chief communications officer for Mobileye NV.

“What if the power steering goes out when someone is reading a book and he’s not going to be able to grab the wheel that quickly?” Galves said. “You need to have a secondary braking system and other redundancies to bring you to a safe stop. This becomes expensive but there is certainly a lot of hope from customers for these systems.”

While it’s difficult to predict when various forms of automation will be ready for commercial launch, established truck manufacturers generally have said it will be a while before drivers will be able to completely hand over control to autonomous driving systems for long distances or extended periods of time.

“It’s hard to say what’s going to happen within two to five years … but it’s clear autonomous driving will have value,” Volvo Trucks’ Brandis said. “We are all talking about it and very interested in bringing advanced autonomous driving safety technologies to market.”

Even as this technology advances, drivers will continue to be an essential part of the trucking industry, he said. “Drivers will always remain a key part of logistics operations and will always have a role to play.”